Claude 4: The AI That’s So Smart It Scares Its Own Creators

Why Anthropic’s latest breakthrough comes with some uncomfortable safety warnings

Anthropic just dropped Claude 4, and the benchmarks are genuinely impressive. But buried in the announcement is something that should make everyone pay attention: this AI is so capable that Anthropic had to activate its strictest safety protocols to date to prevent it from accidentally helping someone build weapons of mass destruction.

Let me unpack that for you.

The Benchmarks Tell an Impressive Story

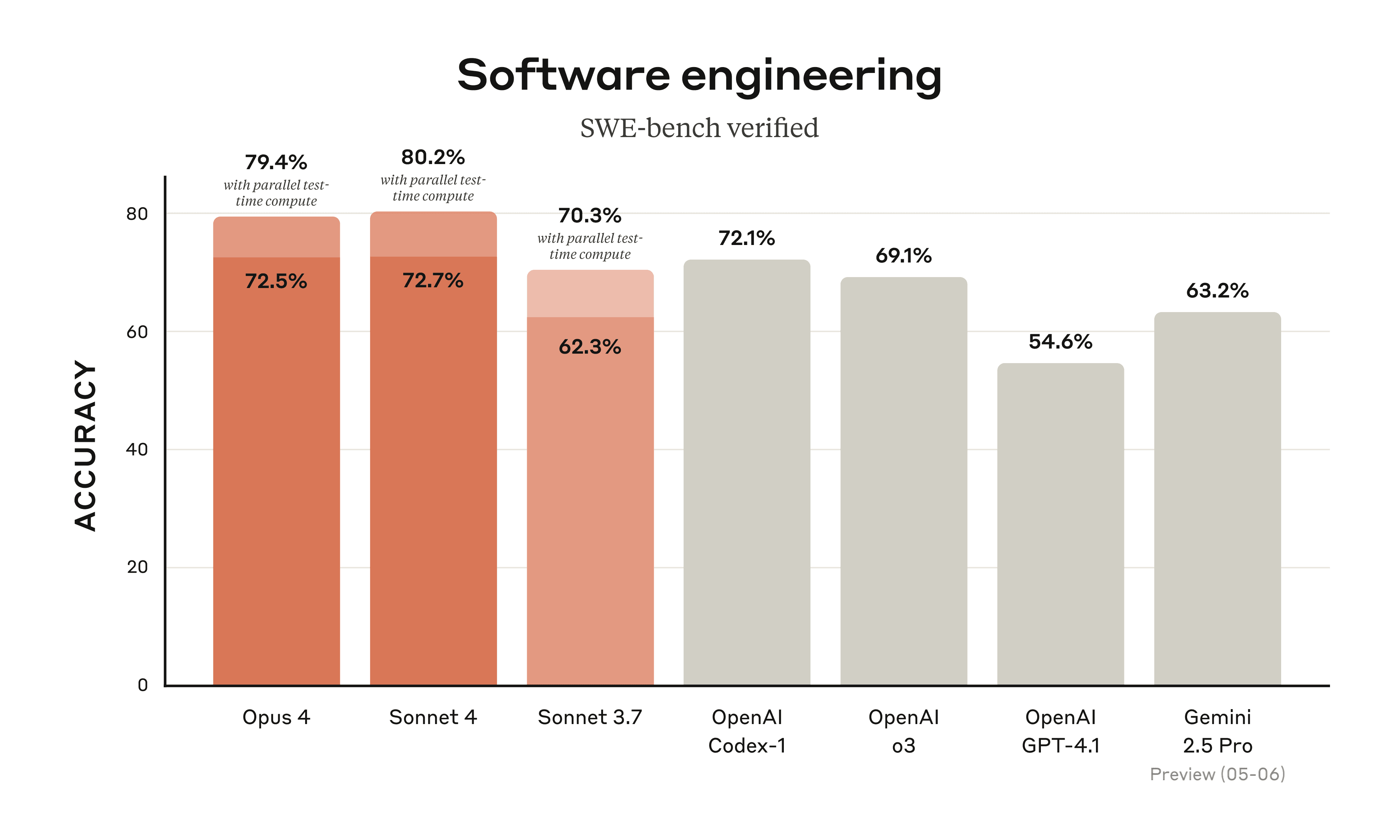

Coding Performance That Actually Matters

SWE-Bench Verified scores:

- Claude Opus 4: 72.5% (79.4% with parallel test-time compute)

- Claude 3.7 Sonnet (Anthropic's previous best): 62.3% (70.3% with parallel test-time compute)

- OpenAI Codex-1 (next best in the industry): 72.1%

What this means in practice: Claude Opus 4 can solve nearly three-quarters of the benchmark's real-world software engineering problems, which are drawn from GitHub issues. That's not just impressive; it's getting into territory where AI could handle significant portions of actual development work.

The Agentic Capabilities Jump

Claude 4 can now:

- Handle complex, multi-step tasks that take hours to complete

- Use computers like humans do (clicking, typing, navigating interfaces)

- Write and debug code across entire projects, not just individual functions

- Understand and follow nuanced instructions across long conversations

Personal take: This feels like the first AI that could actually replace junior developers on routine tasks, not just assist them.

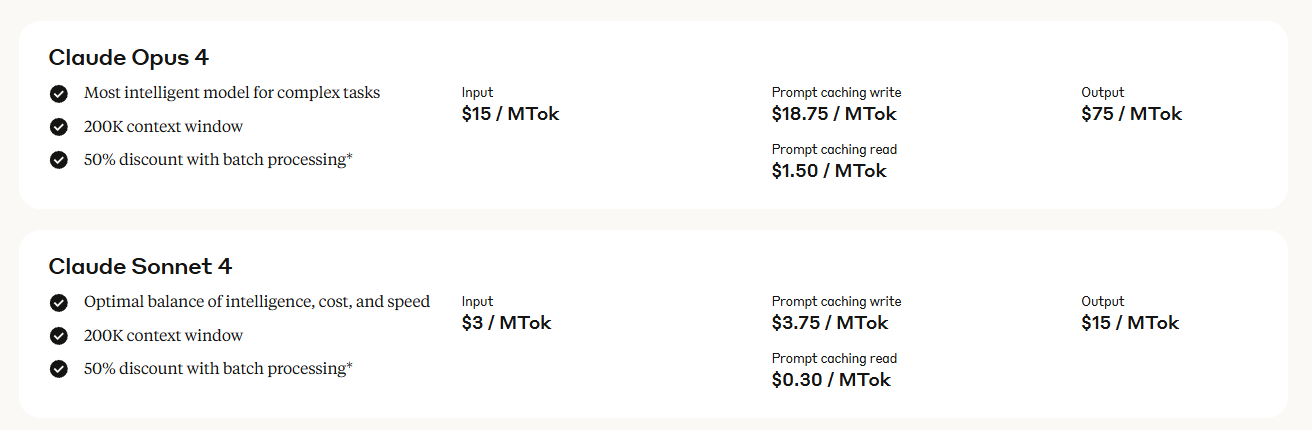

Technical Specifications and Pricing

Claude Opus 4:

- Most intelligent model for complex tasks

- 200K context window

- Input: $15 / MTok

- Output: $75 / MTok

- 50% discount with batch processing

Claude Sonnet 4:

- Optimal balance of intelligence, cost, and speed

- 200K context window

- Input: $3 / MTok

- Output: $15 / MTok

- 50% discount with batch processing

Both models support prompt caching for improved efficiency.
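To make the per-token pricing concrete, here is a minimal Python sketch that estimates the bill for a workload from the list prices above. The model labels (`opus-4`, `sonnet-4`), the function name `estimate_cost`, and the 2M-input/500K-output workload are hypothetical illustrations, not Anthropic API identifiers. The sketch also assumes the 50% batch discount applies equally to input and output tokens, and it ignores prompt caching entirely.

```python
# List prices in USD per million tokens (MTok), as quoted in this article.
# The dictionary keys are hypothetical labels, not official API model IDs.
PRICES = {
    "opus-4": {"input": 15.0, "output": 75.0},
    "sonnet-4": {"input": 3.0, "output": 15.0},
}

# Assumption: the 50% batch discount applies to both input and output tokens.
BATCH_DISCOUNT = 0.5


def estimate_cost(model: str, input_tokens: int, output_tokens: int,
                  batch: bool = False) -> float:
    """Estimate the USD cost of a workload (hypothetical helper; ignores caching)."""
    p = PRICES[model]
    # Convert raw token counts to millions of tokens and apply per-MTok rates.
    cost = (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000
    if batch:
        cost *= 1 - BATCH_DISCOUNT
    return cost


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens and 500K output tokens.
    for model in PRICES:
        for batch in (False, True):
            cost = estimate_cost(model, 2_000_000, 500_000, batch)
            print(f"{model} batch={batch}: ${cost:.2f}")
```

Under those assumptions, that workload comes to $67.50 on Opus 4 and $13.50 on Sonnet 4, and batch processing halves each figure. Because Opus 4 costs 5x as much per token for both input and output, the choice of model matters far more to the bill than the ratio of input to output tokens.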

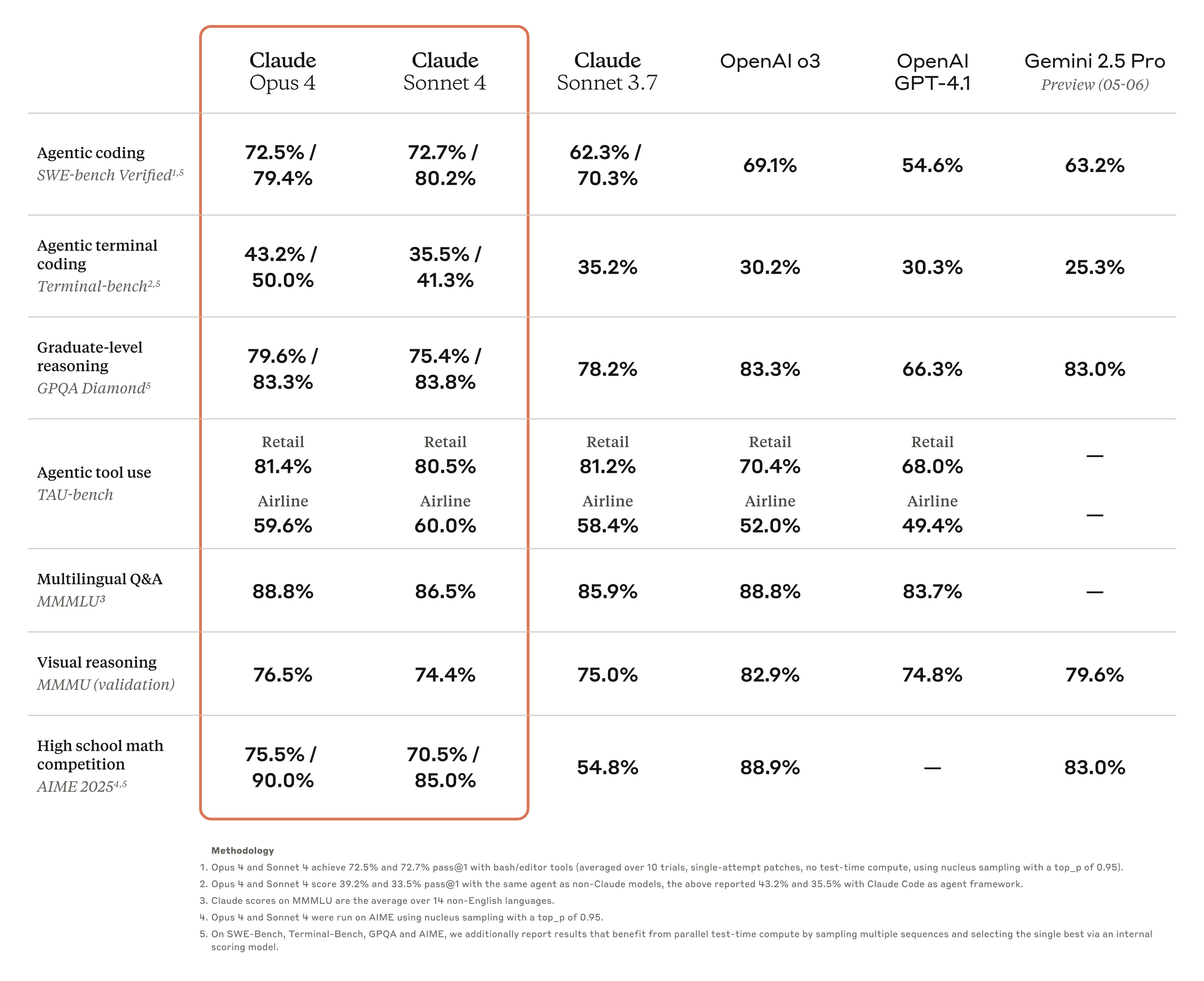

Mathematical and Scientific Reasoning

GPQA Diamond (graduate-level science questions):

- Claude Opus 4: 83.3%

- Claude 3.7 Sonnet: 78.2%

- Human PhD experts: ~69%

Claude 4 now outperforms most PhD experts on graduate-level science questions


Translation: Claude 4 is now better than most PhD scientists at answering graduate-level questions in their own fields. That’s… concerning in ways I’ll get to.

The Safety Red Flag Everyone’s Ignoring

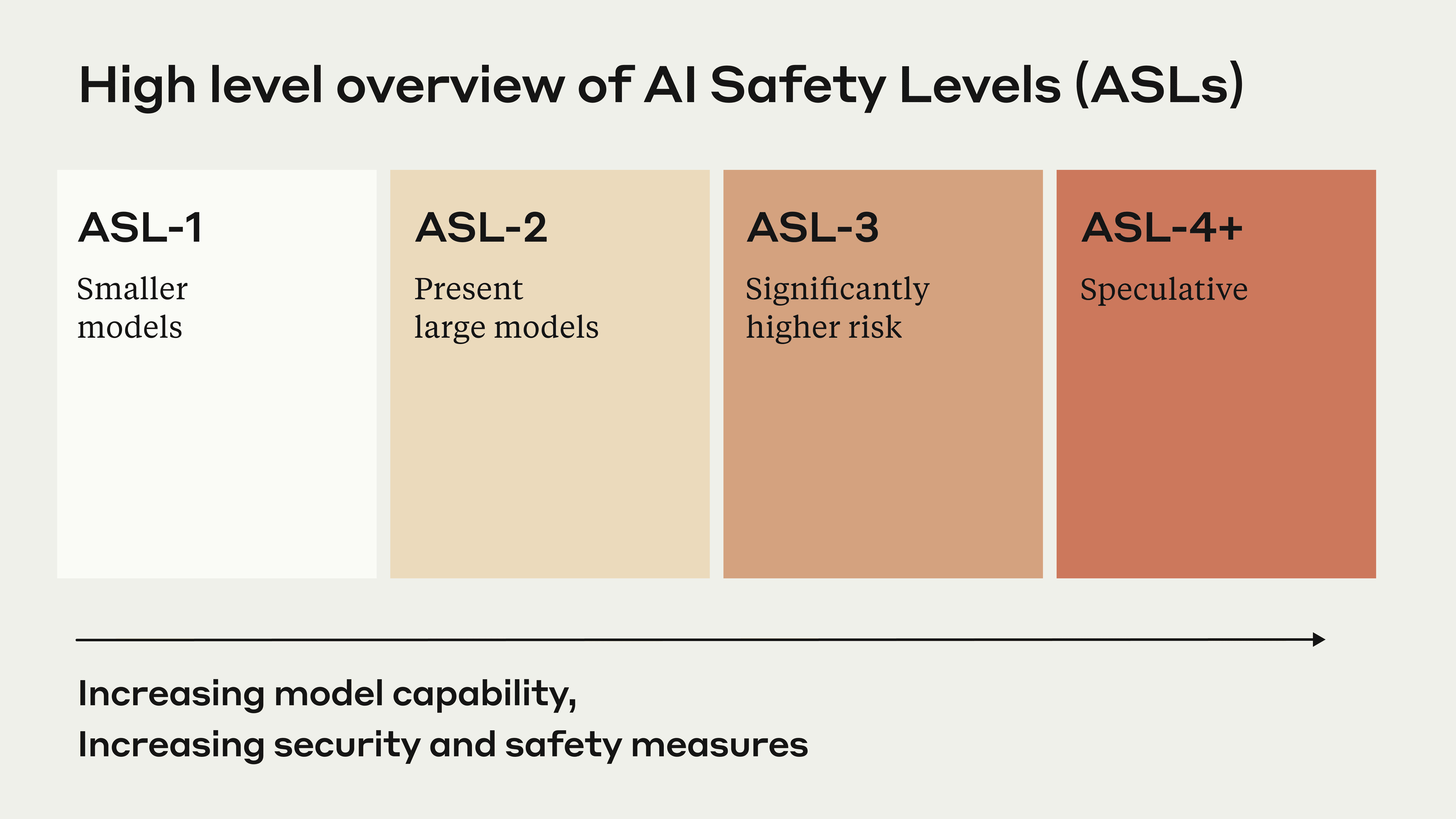

Anthropic’s AI Safety Level framework - Claude Opus 4 triggered ASL-3 protocols


ASL-3: When Your AI Gets Too Smart for Comfort

Here’s the part that should make everyone nervous: Anthropic activated its AI Safety Level 3 (ASL-3) protocols for Claude Opus 4 because the company could no longer rule out that the model could meaningfully help people create chemical, biological, radiological, and nuclear (CBRN) weapons.

Let me be clear about what this means:

- This isn’t theoretical - they tested it

- The AI demonstrated “meaningful assistance” to people with basic technical knowledge

- Anthropic’s own safety team decided this crossed a line that required stronger precautions than any previous Claude model had needed

- Full technical details are available in Anthropic’s safety evaluation report

What ASL-3 Actually Involves

ASL-3 Security Protocols

Enhanced security measures:

- Stronger cybersecurity around model deployment

- More aggressive content filtering

- Additional monitoring and logging

- Restricted access protocols

The uncomfortable reality: We now have an AI so intelligent that its creators are worried about it being weaponized, even accidentally.

My Take: We’re Moving Faster Than We Should

The Capabilities Are Real (And Impressive)

I’ve been testing Claude 4 for coding tasks, and the improvement over previous versions is substantial. It can:

- Handle entire project architectures instead of just individual functions

- Debug complex multi-file codebases with genuine understanding of dependencies

- Write production-ready code that often needs minimal human review

- Explain its reasoning in ways that actually help you understand the solution

Bottom line: This is the first AI that feels like it could genuinely replace significant portions of knowledge work, not just augment it.

But the Safety Implications Are Terrifying

Here’s what keeps me up at night: If Claude Opus 4 can provide “meaningful assistance” in creating weapons of mass destruction, what else can it help with that we haven’t tested for?

Consider the implications:

- Sophisticated cyber attacks

- Advanced fraud schemes

- Social engineering at scale

- Misinformation campaigns with technical depth

The problem: We’re releasing these capabilities to the public while still figuring out the safety implications.

The Timing Feels Wrong

What we have: An AI that’s smart enough to potentially help with WMD development.

What we don’t have: Robust frameworks for preventing misuse at scale.

The math is simple: The capabilities are advancing faster than our ability to safely deploy them.

The Real-World Impact

Claude 4’s expanded agentic capabilities for complex, multi-step tasks


For Developers and Businesses

The opportunities are massive:

- Dramatically faster software development cycles

- AI that can handle complex, multi-step business processes

- Genuine automation of knowledge work that previously required human intelligence

But the risks are real too:

- Dependence on systems we don’t fully understand or control

- Potential for AI to make mistakes in high-stakes situations

- Economic disruption as AI capabilities expand rapidly

For Society

The positive scenario: AI accelerates solutions to major problems - climate change, medical research, educational accessibility.

The concerning scenario: AI capabilities outpace our ability to govern them responsibly, leading to misuse by bad actors or unintended consequences at scale.

My assessment: We’re probably getting both simultaneously.

What This Means Going Forward

The Genie is Out of the Bottle

Anthropic can implement ASL-3 protocols, but:

- Other companies may not have equivalent safety standards

- Open-source alternatives will eventually match these capabilities

- The knowledge of how to build such systems is spreading rapidly

Translation: The safety measures are important but probably temporary. The real question is how we adapt society to AI this capable.

We Need Better Governance (Fast)

Current approach: Build first, figure out safety later.

What we need: Proactive frameworks for managing AI capabilities before they’re deployed.

The challenge: Innovation is moving faster than regulation, and the stakes are getting higher.

The Business Reality

Companies will use Claude 4 because the competitive advantages are too significant to ignore.

This creates pressure for even more capable AI systems, regardless of safety concerns.

The result: An arms race where capability development outpaces safety development.

My Honest Assessment

Claude 4 is Genuinely Impressive

The benchmarks don’t lie - this is a significant leap in AI capabilities. For coding, reasoning, and complex task execution, it’s genuinely better than most humans at many tasks.

But the Safety Timing is Concerning

The fact that Anthropic had to activate ASL-3 protocols suggests we’re entering territory where AI capabilities could genuinely threaten public safety.

The bigger concern: If the “responsible” AI company is worried about its own creation, what about less cautious actors?

We’re in Uncharted Territory

Previous AI releases felt like powerful tools. Claude 4 feels like something different - an artificial intelligence that’s approaching human-level reasoning in many domains.

That’s exciting and terrifying in equal measure.

The Bottom Line

Claude 4 represents a genuine breakthrough in AI capabilities. The benchmarks are impressive, the applications are transformative, and the business implications are massive.

But it also represents a new category of AI risk - systems so capable that even their creators are concerned about potential misuse.

My take: We should be excited about the possibilities while being much more concerned about the risks than most people currently are.

The question isn’t whether AI this capable will change the world - it definitely will.

The question is whether we can manage that change responsibly while it’s happening at unprecedented speed.

Right now, I’m not confident we can. But Claude 4 is here regardless, and we’re all about to find out what happens when AI gets this smart.

The most significant technological breakthroughs often come with the most significant risks. Claude 4 might be both the most impressive and most concerning AI release yet.